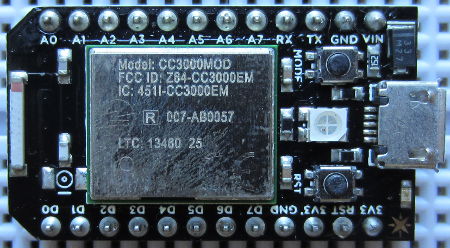

In our blog post Spark Core Introduction and First Impressions we introduced Spark Core – a Wi-Fi enabled Internet of Things device which can be programmed like an Arduino and accessed via the internet.

Of most interest to us at REUK is using Spark Core to enhance our range of solar water heating controllers by adding datalogging and internet functionality. We therefore want to read temperatures from DS18B20 digital temperature sensors connected to a Spark Core. These are the same type of sensor used in our 2014 Solar Water Heating Pump Controller.

As a test we connected a DS18B20 temperature sensor to the Spark Core. Pin 1 of the sensor (GND) connects to GND, Pin 3 (VDD) to 3.3V, and Pin 2 (data) to a digital pin on the Spark Core; we arbitrarily chose D2. Finally we connected a 4K7 (4.7 kΩ) pull-up resistor across Pins 2 and 3 of the sensor, between the data line and 3.3V. We then entered the following code in the Spark IDE and flashed it to the Spark Core:

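```cpp
// Libraries added to the app through the Spark IDE
#include "spark-dallas-temperature/spark-dallas-temperature.h"
#include "OneWire/OneWire.h"

#define ONE_WIRE_BUS 2                  // sensor data line (Pin 2) is on D2

OneWire oneWire(ONE_WIRE_BUS);          // 1-Wire bus on D2
DallasTemperature sensor(&oneWire);     // Dallas/Maxim sensors on that bus

float temperature = 1.0;
char myStr[10];                         // latest reading as text, e.g. "23.125"

void setup() {
    // Publish myStr to the Spark Cloud under the name "read"
    Spark.variable("read", myStr, STRING);
    sensor.begin();
    sensor.setResolution(12);           // 12-bit: 0.0625 C per step
}

void loop() {
    sensor.requestTemperatures();               // start a conversion and wait for it
    temperature = sensor.getTempCByIndex(0);    // first sensor on the bus, in C
    sprintf(myStr, "%.3f", temperature);        // float -> string, three decimals
}
```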
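The call sensor.setResolution(12) selects the finest of the DS18B20's four resolutions. As a rough guide, the Python sketch below tabulates the step size and maximum conversion time for each setting, using the figures in the Maxim DS18B20 datasheet, and converts a raw 12-bit temperature register value to °C. The names RESOLUTION and raw_to_celsius are our own; on the Spark Core the DallasTemperature library does this conversion for you.

```python
# DS18B20 resolution figures from the Maxim datasheet:
# bits -> (step size in degrees C, maximum conversion time in ms)
RESOLUTION = {
    9: (0.5, 93.75),
    10: (0.25, 187.5),
    11: (0.125, 375.0),
    12: (0.0625, 750.0),
}


def raw_to_celsius(raw: int) -> float:
    """Convert the 16-bit two's-complement temperature register to degrees C.

    Assumes 12-bit resolution, where each count is 1/16 degree C.
    """
    if raw & 0x8000:        # sign bit set: negative temperature
        raw -= 0x10000
    return raw / 16.0


if __name__ == "__main__":
    for bits, (step, ms) in RESOLUTION.items():
        print(f"{bits}-bit: {step} C per step, up to {ms} ms per conversion")
    print(raw_to_celsius(0x0191))   # datasheet example register value for +25.0625 C
    print(raw_to_celsius(0xFF5E))   # datasheet example register value for -10.125 C
```

At 12 bits each step is 0.0625 °C, so formatting to three decimal places keeps the reading within 0.0005 °C of the sensor's value, far inside its stated ±0.5 °C accuracy. The trade-off is a conversion time of up to 750 ms, which requestTemperatures() normally waits out before returning.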

At the time of writing (August 2014) a Spark.variable cannot be a float: the code simply will not compile. The temperature from the sensor, which getTempCByIndex() returns as a float, must therefore either be stored as an integer, losing accuracy to rounding, or be converted into a string so that it can be read remotely. We did the latter above, using the sprintf function to format the reading to three decimal places.
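To put a number on the accuracy lost to rounding, the Python sketch below compares storing whole degrees as an integer with the three-decimal string used above. It also includes a hypothetical third option that we did not use: an integer counting thousandths of a degree, which would need dividing by 1000 at the receiving end. The function names are our own, and Python's "%.3f" formatting stands in for sprintf on the Spark Core.

```python
# How much of a 12-bit DS18B20 reading survives each way of exposing it.


def as_whole_degrees(t: float) -> int:
    """Store as an int of whole degrees: the fraction is lost to rounding."""
    return round(t)


def as_three_decimals(t: float) -> str:
    """Format to three decimal places, like sprintf(myStr, "%.3f", ...)."""
    return "%.3f" % t


def as_millidegrees(t: float) -> int:
    """Hypothetical alternative: an int counting thousandths of a degree."""
    return round(t * 1000)


if __name__ == "__main__":
    # Sample values are multiples of 0.0625, as a 12-bit sensor reports them
    for t in (19.0625, 21.8125, -10.125):
        print(f"{t:>9}  int: {as_whole_degrees(t):>4}  "
              f"string: {as_three_decimals(t):>8}  "
              f"millidegrees: {as_millidegrees(t):>6}")
```

Whole degrees can be off by up to 0.5 °C, the same size as the sensor's own accuracy limit, whereas both the three-decimal string and a millidegree integer stay within 0.0005 °C of the reading.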

We then wrote the following Python script on an internet connected Raspberry Pi to grab the temperature measurement once every minute and to append it to a text file for datalogging and later analysis:

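```python
#!/usr/bin/env python3
# Poll the Spark Core's "read" variable once a minute and append it to a log file.
import json
import time
import urllib.request

URL = 'https://api.spark.io/v1/devices/YOURDEVICEID/read?access_token=YOURACCESSTOKEN'

while True:
    with urllib.request.urlopen(URL) as response:
        reading = json.loads(response.read().decode('utf-8'))
    temperature = reading['result']     # the string produced by sprintf on the Core
    # "with" closes the file automatically, so no explicit close() is needed
    with open("core-temp-log.txt", "a") as myfile:
        myfile.write(temperature + '\n')
    time.sleep(60)
```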

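Here "read" is the name under which the firmware publishes myStr with Spark.variable, so the "result" field of the JSON reply is the temperature reading as a string, exactly as formatted by sprintf on the Spark Core.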
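The logging script stops at the first network error and records readings without times. A sketch of a more forgiving logger, assuming Python 3 on the Raspberry Pi, reads the same "read" variable, skips failed requests and the -127 °C value that the DallasTemperature library reports when it cannot reach the sensor, and writes a timestamp alongside each reading. The helper names parse_temperature and format_line and the comma-separated log layout are our own choices; DEVICE_ID and ACCESS_TOKEN are placeholders, as in the original script.

```python
#!/usr/bin/env python3
"""Poll the Spark Core's "read" variable once a minute and log it with a timestamp.

A sketch: DEVICE_ID and ACCESS_TOKEN are placeholders, and the log layout
(timestamp,temperature) is our own choice.
"""
import json
import time
import urllib.request
from datetime import datetime

DEVICE_ID = "YOURDEVICEID"
ACCESS_TOKEN = "YOURACCESSTOKEN"
URL = ("https://api.spark.io/v1/devices/%s/read?access_token=%s"
       % (DEVICE_ID, ACCESS_TOKEN))
LOG_FILE = "core-temp-log.txt"
DISCONNECTED_C = -127.0   # DallasTemperature's value when no sensor answers


def parse_temperature(body):
    """Return the JSON "result" field as a float, or None if it is unusable."""
    try:
        value = float(json.loads(body)["result"])
    except (ValueError, KeyError, TypeError):   # bad JSON, no "result", wrong type
        return None
    return None if value == DISCONNECTED_C else value


def format_line(when, temperature):
    """One log line: ISO 8601 timestamp, comma, temperature to three decimals."""
    return "%s,%.3f\n" % (when.isoformat(timespec="seconds"), temperature)


def main():
    while True:
        try:
            with urllib.request.urlopen(URL, timeout=30) as response:
                temperature = parse_temperature(response.read().decode("utf-8"))
        except OSError as err:   # URLError, HTTPError and timeouts derive from OSError
            print("request failed:", err)
            temperature = None
        if temperature is not None:
            with open(LOG_FILE, "a") as log:
                log.write(format_line(datetime.now(), temperature))
        time.sleep(60)


if __name__ == "__main__":
    if "YOUR" in DEVICE_ID or "YOUR" in ACCESS_TOKEN:
        print("Set DEVICE_ID and ACCESS_TOKEN before running.")
    else:
        main()
```

Keeping the parsing and formatting in small functions makes them easy to check without a network connection, and a timestamped log is simpler to plot or analyse later than a bare list of readings.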

Now that the Spark Core is reading a DS18B20 successfully, it is possible to fully replicate our Arduino-based solar water heating pump controllers, with the added benefits of internet connectivity and effective remote datalogging.
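A solar water heating pump controller of this kind typically works as a differential thermostat: it compares a sensor on the solar collector with one in the hot water tank and runs the pump only while the collector is sufficiently hotter. The Python sketch below shows that decision with hysteresis and a tank over-temperature cut-off. The thresholds and the function name pump_should_run are hypothetical values chosen for illustration; on a Spark Core the same logic would be written in C++ inside loop(), reading two DS18B20s on the bus with getTempCByIndex(0) and getTempCByIndex(1).

```python
"""Generic differential-thermostat logic for a solar water heating pump.

A sketch only: all thresholds are hypothetical illustration values.
"""

ON_DIFFERENCE_C = 8.0    # hypothetical: start the pump when the panel is this much hotter
OFF_DIFFERENCE_C = 3.0   # hypothetical: stop it once the difference falls to this
TANK_LIMIT_C = 80.0      # hypothetical: never pump once the tank is this hot


def pump_should_run(panel_c: float, tank_c: float, pump_on: bool) -> bool:
    """Decide the pump state from collector (panel) and tank temperatures.

    A lower switch-off difference than switch-on difference (hysteresis)
    stops the pump cycling rapidly when the two temperatures are close.
    """
    if tank_c >= TANK_LIMIT_C:
        return False
    difference = panel_c - tank_c
    if pump_on:
        return difference > OFF_DIFFERENCE_C
    return difference >= ON_DIFFERENCE_C


if __name__ == "__main__":
    pump = False
    # (panel, tank) readings, e.g. one pair per minute
    for panel, tank in [(30.0, 35.0), (44.0, 35.0), (40.0, 36.0), (38.5, 36.0)]:
        pump = pump_should_run(panel, tank, pump)
        print(f"panel {panel:5.1f} C  tank {tank:5.1f} C  pump {'ON' if pump else 'off'}")
```

In the sample run the pump starts at a 9 °C difference, keeps running at 4 °C because it is already on, and stops at 2.5 °C.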

See our Raspberry Pi articles Publish Temperature Sensor Readings to Twitter and Temperature Logger with Xively to find out how to publish your collected data to the internet automatically, either as a Twitter feed or to Xively, an online datalogger with graph plotting.